Beyond benchmarks: Towards robust artificial intelligence bone segmentation in socio-technical systems

Abstract

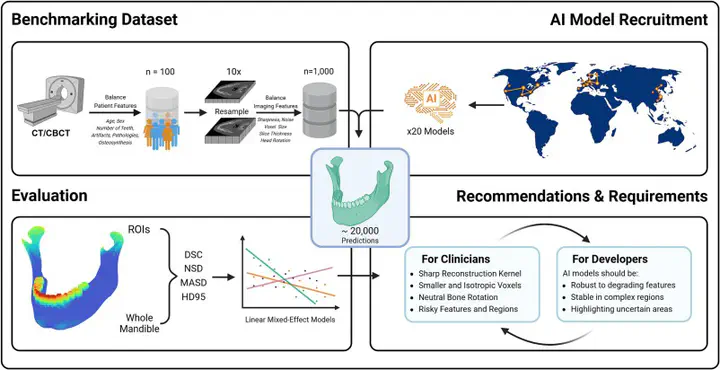

Despite advances in automated medical image segmentation, AI models still underperform in many clinical settings, which hinders their integration into real-world workflows. In this pre-registered, prospective, multicenter evaluation, we analyzed 20 state-of-the-art mandibular segmentation models across 19,218 segmentations of 1,000 clinically resampled CT/CBCT scans. Our results suggest that, for a given model, segmentation accuracy can vary by up to 25% in Dice score as socio-technical factors such as voxel size, bone orientation, and patient conditions (e.g., osteosynthesis or pathology) shift from favorable to adverse. Higher image sharpness, smaller isotropic voxels, and neutral bone orientation significantly improved results, whereas metallic osteosynthesis and anatomical complexity significantly degraded them. Our findings challenge the common view of AI models as “plug-and-play” tools, and we derive evidence-based optimization recommendations for both clinicians and developers, which could in turn facilitate the integration of AI segmentation tools into routine healthcare.
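The Dice score measures voxel overlap between a predicted mask A and a reference mask B as 2|A ∩ B| / (|A| + |B|). What follows is a minimal sketch that assumes binary masks flattened to equal-length sequences of 0/1 values. The function name `dice_score` and the convention of returning 1.0 when both masks are empty are illustrative assumptions. This is not the evaluation code used in this study.

```python
from typing import Sequence


def dice_score(pred: Sequence[int], ref: Sequence[int]) -> float:
    """Dice similarity coefficient between two flattened binary masks.

    Hypothetical helper for illustration: Dice = 2|A ∩ B| / (|A| + |B|),
    where A and B are the sets of foreground (non-zero) voxels.
    """
    if len(pred) != len(ref):
        raise ValueError("masks must contain the same number of voxels")
    # Voxels labeled foreground in both the prediction and the reference.
    intersection = sum(1 for p, r in zip(pred, ref) if p and r)
    # Total foreground voxels across both masks.
    total = sum(1 for p in pred if p) + sum(1 for r in ref if r)
    if total == 0:
        # Both masks empty: treated here as perfect agreement (an assumed convention).
        return 1.0
    return 2.0 * intersection / total


if __name__ == "__main__":
    reference = [0, 1, 1, 1, 1, 0]
    good_pred = [0, 1, 1, 1, 0, 0]  # misses one foreground voxel
    poor_pred = [1, 1, 1, 0, 0, 0]  # shifted prediction
    print(round(dice_score(good_pred, reference), 3))
    print(round(dice_score(poor_pred, reference), 3))
```

With these toy masks, the first prediction scores 6/7 (about 0.857) and the shifted one scores 4/7 (about 0.571). This illustrates how a change in input conditions that displaces the predicted boundary can lower Dice by a large margin.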